AI Agents #1: (Ground)breaking LLMs?

OpenAI's DevDay this week was a great leap forward in abstracting and accelerating the deployment of LLMs for more complex use cases for developers. Especially, the focus on AI agents, RAG, and the token cost slashing left many to wonder (and joke about) how it would leave the current ecosystem of startups that raised money on the premise of differentiating among any of the latter.

All jokes aside, amidst these exciting updates, a critical component was notably under-discussed: security. This gap was conspicuous, with the burden of addressing security issues seemingly shifting to Microsoft. The brief mention of a new feature around output enforcement, reminiscent of the OS library of Guardrails AI, seemed almost negligible amidst the broader updates that were announced. Especially the security in the context of AI agents, given the rapid pace at which these will likely be implemented downstream gave us a lot of food for thought. As Simon Willison put it, the ease with which OpenAI's outputs could be exploited is a daunting prospect. We agree with this and expect that companies or engineers have not thoroughly thought that through yet.

As AI agents will enter the fray, and start to interact with other systems and untrusted sources of data, security will become ever more critical. Exactly for that reason, we decided to take a closer look at what AI agents are, and how their adoption without security in mind will significantly extend the complexities of attacks and their respective severity.

So what are AI Agents?

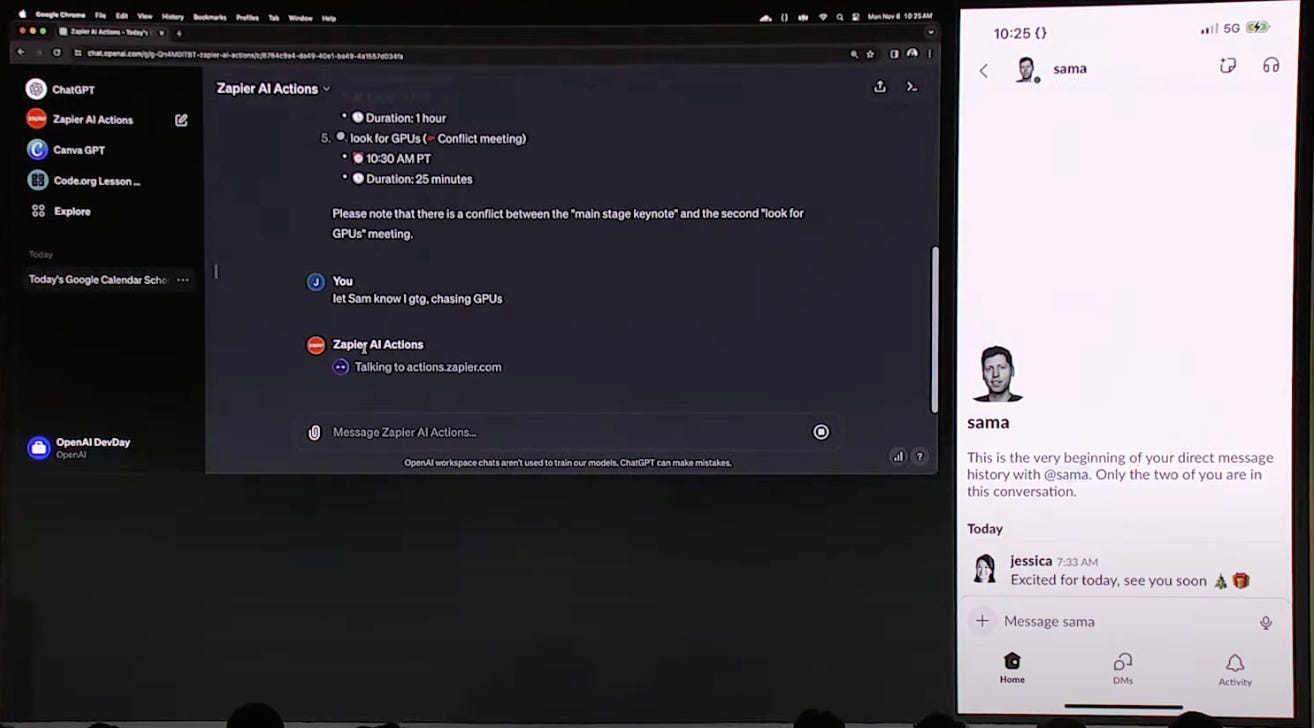

At their core, AI agents are automated entities designed to perform tasks with minimal human intervention. To put it simply, AI agents transcend simple input/output execution in LLMs and instead have access to a set of tools through plugins (i.e. Zapier, Gmail, etc.) which can help it with fetching external data, making actions (i.e. sending emails, messages), or running computations. These tools/plugins are not just add-ons but are integral components that expand the AI agents' ability to interface with external systems, thereby enriching their applicability. In essence, the LLM can then solve tasks using any of these tools based on an initial prompt and autonomously determine which tool it uses next to make progress toward solving the problem. Therefore, AI agents bring the promise of LLMs venturing into realms of nuanced interactions and multifaceted functionalities. However, this integration brings forth a host of security challenges, turning these plugins into potential gateways for exploitation.

As highlighted in OpenAI’s DevDay, the most basic AI agents built with GPT can be used to interact with your calendar with automated scheduling, send messages over Slack, or create flyer designs with Canva. While the latter is only a glimpse of the realm of possibilities for AI agents, OpenAI’s GPT Store will likely drive more advanced use cases with plugins for online purchases, managing your bank account, or configuring your AWS infrastructure. As Open AI’s LLMs are still heavily susceptible to both direct and indirect prompt injections, the combination of AI agents with untrusted sources of data will lead to a plethora of exploitations in the coming months. As Johann Rehberger (Wunderwuzzi23) predicts, “Random webpages and data will hijack your AI, steal your stuff, and spend your money”.

In the next article, we will unpack the vulnerabilities and security threats that go hand in hand with AI agents.

Subscribe to stay tuned for more.